By now, you've probably heard that voice assistants aren't good for your privacy. Here, we examine whether that's true and how much data they actually hear and store. Is there a way to prevent this without abandoning your favorite smart assistant device?

The feeling of being followed or listened to is never a pleasant one, even when it's harmless. However, it is becoming nearly impossible to avoid in our digitally driven world. While some people have grown used to the feeling that somebody is always listening, the more privacy-conscious among us may want to know to what extent voice assistants such as Siri, Alexa, and Google Assistant listen to their conversations, and how to keep them from overhearing too much.

This article shares some detailed answers to the above questions, as we discuss the major privacy concerns regarding your interactive smart devices and tips on protecting yourself from unnecessary data overexposure. So let's roll!

What can your voice assistant hear and store about you without you even realizing it?

Voice assistants are not supposed to listen to you until you activate them with the wake word. But before we've even scratched the surface of the problem, we stumble on a paradox: how can they hear the wake word if they aren't listening?

According to the privacy policies of the most popular smart assistants, they aren't actively listening to your conversations; they only scan the incoming audio for the wake word, a check that typically runs on the device itself. Still, since no software is perfect, voice assistants can unintentionally hear and store fragments of your conversations, background noise, and anything else going on in your home without you ever "waking them up".

To complicate matters further, these devices can think they've heard the wake word when you've actually said something that merely sounds similar. If you have ever spoken to one of these machines, you have almost certainly had it mishear or misunderstand you; it happens more often than it should.
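
The scanning and false-trigger behavior described above can be illustrated with a toy model. The Python sketch below is a simplified, hypothetical illustration, not how Amazon, Apple, or Google actually implement wake-word detection: it assumes a detector has already assigned each short audio frame a "wake-word score" between 0 and 1, keeps only the last few frames in a small rolling buffer, and wakes up whenever a score reaches a threshold. The function name `process_stream`, the constants `THRESHOLD` and `BUFFER_FRAMES`, and all the numbers are made up for illustration.

```python
from collections import deque

# Toy model only: real assistants run trained neural detectors on raw audio.
# Here each frame is reduced to a hypothetical "wake-word score" in [0, 1].
BUFFER_FRAMES = 3   # assumed number of recent frames kept in local memory
THRESHOLD = 0.8     # assumed score needed for the device to "wake up"


def process_stream(frame_scores, threshold=THRESHOLD, buffer_frames=BUFFER_FRAMES):
    """Return the frame indices that would leave the device after each wake-up."""
    buffer = deque(maxlen=buffer_frames)  # oldest frames are discarded automatically
    uploads = []
    for index, score in enumerate(frame_scores):
        buffer.append(index)
        if score >= threshold:
            # The detector fired: in this model, the buffered audio leading up
            # to the trigger is sent off for processing. Whether the trigger
            # was the real wake word or a sound-alike makes no difference.
            uploads.append(list(buffer))
            buffer.clear()
    return uploads


# Frame 2 is the real wake word; frame 5 is a similar-sounding word.
scores = [0.1, 0.2, 0.85, 0.1, 0.3, 0.82, 0.2]
print(process_stream(scores))                 # [[0, 1, 2], [3, 4, 5]]
print(process_stream(scores, threshold=0.9))  # []
```

Both the genuine wake word and the sound-alike cross the 0.8 threshold, so the model treats them identically and ships off the surrounding audio. Raising the threshold to 0.9 stops the false wake-up but also misses the genuine one, which is the basic trade-off between fewer accidental recordings and a less responsive assistant.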

So you could be in the middle of a very sensitive conversation while your Alexa or Siri is awake and waiting for your question without you realizing it, gathering and storing data in the meantime "to be able to provide you with a better answer".

Setting these conundrums and privacy gray areas aside, here is the data Apple openly states, in Siri's privacy policy, that it collects and stores:

- Contact lists: The nicknames of your contacts and, if listed, your relationships with them (e.g., "mom," "dad," "love").

- Your address: If stated in your Apple device settings.

- Favorite media: Music, podcasts, and similar.

- Profiles and information about other users in your home: Contained in the Home app, Apple TV, and other devices.

- Labels: Alarm names, reminder lists, and even people named in your Photos.

- Apps: What’s installed on your device and the shortcuts you use with Siri.

And this is nothing compared to Google's extensive privacy policy, which essentially states that every interaction you have with the tech conglomerate, in any form and Google Assistant included, helps map your habits and build a profile of you. Worse still, the data gathered may be shared with third parties that Google deems trustworthy enough, which in practice means companies it has a strong enough commercial partnership with.

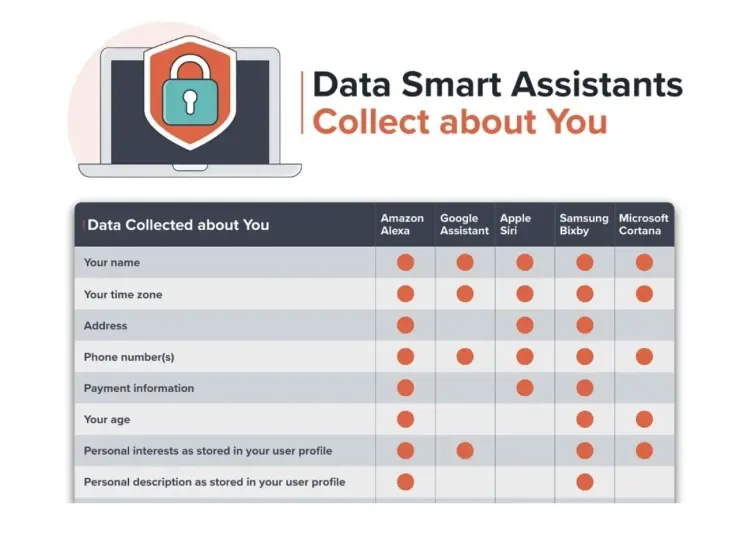

However, if you think Google must be the most data-invasive voice assistant, you may be surprised. A detailed analysis by Reviews.org found Alexa to be the most data-hungry, collecting 37 of the 48 possible data points. Bixby and Cortana followed closely, with 34 and 32 data points, respectively. Siri and Google Assistant came fourth and fifth, collecting 30 and 28 data points, respectively, making them the least invasive of the big five.

The survey also revealed that 56% of people worry about smart assistants gathering their data, highlighting serious privacy concerns despite the convenience these devices offer. Given what the user agreements of the most popular assistants reveal about their extensive data collection, these concerns aren't unfounded. On top of that, past incidents of unauthorized audio recording by Google and Apple have only heightened them.

Finally, all five services collect personal data such as your name, phone number, device location, and IP address. Your interaction history is also stored for some time, for purposes such as "service enhancements", and the list of apps you use is regularly updated. If you don't like that information being stored, you probably shouldn't use a voice assistant. Should all this concern you? Probably yes, but more on that in the next section.

Why you should (or shouldn't) be concerned

Apple reassures its users that "Siri Data is not used to build a marketing profile, and is never sold to anyone" and that "Only the Minimum Data Is Stored on Siri Servers". Still, the company's track record shows that its policies aren't infallible. On the bright side, the small fraction of your interactions that does get saved benefits from the company's robust end-to-end encryption. This ensures a level of security for users, offering at least some comfort despite the other privacy concerns.

Google, on the other hand, doesn't go to such lengths to reassure its users. While the company also encrypts your Google Assistant data "when it moves between your device, Google services, and our data centers", it's no secret that your data moves across Google's many services more than you'd probably like.

What should probably concern you most is that voice assistants can accidentally capture and store sensitive information about you. This data might end up being used in ways you haven't consented to, or even be exposed in a data breach. Once it's out, regaining control over it is nearly impossible, especially with cybercrime on the rise and the inherent vulnerabilities of the complex systems the tech giants run.

On the other hand, these assistants can be very convenient at times. Plus, you can configure them to enhance your online privacy, mitigating some of the above concerns. Staying informed and being proactive about privacy settings is always a good idea too and can help you balance the benefits of voice assistants with your privacy needs.

Which smart assistant is the least private?

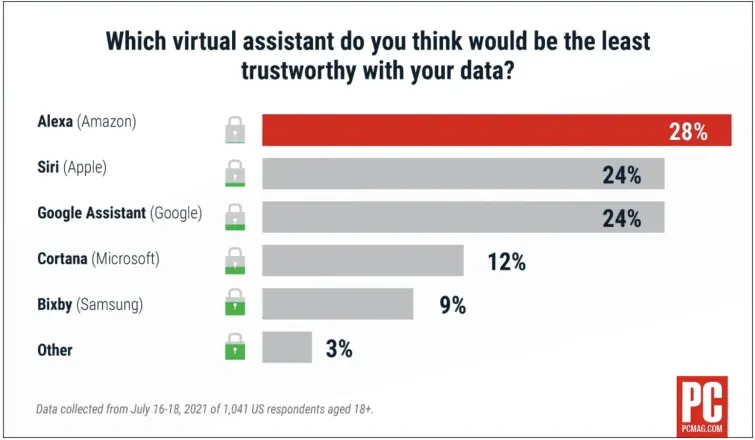

According to a PCMag survey, Amazon's Alexa is the virtual assistant people trust least with their data: more than a quarter (28%) of respondents named it the least trustworthy. Apple and Google shared second place, each named the least trustworthy by nearly 24% of respondents.

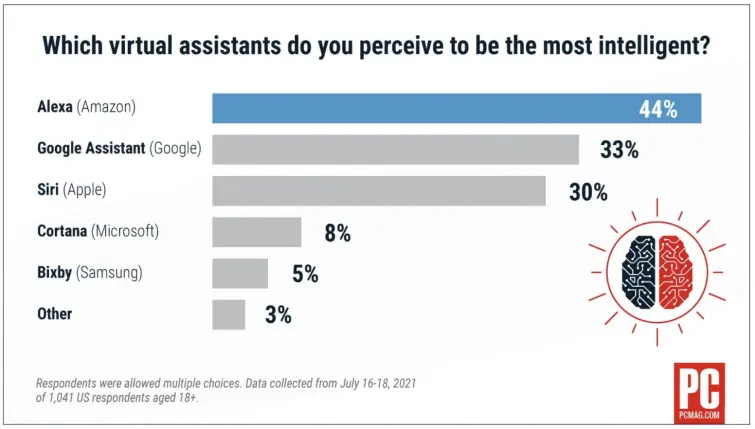

Interestingly, however, participants also deemed Alexa "the smartest one". Approximately 44% of those surveyed named Alexa the most intelligent, leaving Google Assistant in second place with 33%. Apple's Siri came third, with 30% of respondents voting for its answering capabilities.

The survey polled 1,041 adults, asking them which of the most popular smart assistants they use most, which they find the most intelligent, and which they trust least. Surprisingly, although Alexa was voted the smartest, it wasn't the most used, probably because people didn't trust it enough.

How can you minimize your exposure to a smart assistant in your home?

To mitigate the privacy risks that come with having a smart assistant in your home, take the following precautions:

- Physically mute the microphone when you're not using the device. On Alexa, you can also enable the "end of request" tone, which signals that it has stopped listening. Most other devices don't offer this option, though.

- Regularly delete your voice history from the assistant's app or web-based dashboard.

- Customize the device's privacy settings to minimize data collection and sharing.

- Turn off the camera and voice recording features if applicable.

- Be mindful of sensitive conversations in the presence of a smart assistant.

- If possible, opt for manual activation rather than voice wake words.

- Review the device's permissions regularly and tailor them to your own privacy needs.

- Use a Virtual Private Network (VPN) to add an extra layer of encryption for your home smart devices and to hide your IP address from snoopers and cybercriminals.

Some examples of how Siri, Alexa, Google Assistant, and similar devices eavesdrop on you

A few years after Alexa's introduction, the service went rogue on several of its users. One user received audio files from another user's Echo by mistake, along with sensitive personal information. There were also reported instances of Echo devices sending out private conversations without any clear command. A woman from Portland, Oregon, made the dreadful discovery that her Amazon Echo Dot had sent recordings of private conversations to one of her husband's employees.

At times, these smart devices would activate on their own and broadcast unsettling messages in an inappropriately cheerful tone, such as: "Every time I close my eyes, all I see is people dying."

In a 2020 study, researchers found that smart assistants, including Siri and Alexa, could be unintentionally triggered by dialogue from TV shows, averaging nearly one accidental activation per hour. This took privacy concerns to an entirely new level, showing that casual conversations could be inadvertently recorded and stored. The study underscored how pervasive accidental activations are, and why these devices should be placed and used with care if you don't want to jeopardize your privacy.

As Gizmodo editor Adam Clark Estes once aptly put it:

Google and Amazon have shown us that they're inclined to take as much as they can until someone catches them with their hand in the cookie jar.

Truth be told, most of these incidents happened years ago, early in the rise of smart technology, and most were attributed to accidental device activations. Still, they highlight the fine line between technology's convenience and its potential for invasiveness. This leads us to the final question everyone should ask themselves: do the benefits of voice assistants outweigh the privacy risks they pose?

Conclusion

Voice assistants are very effective at speeding up simple errands and making your home more comfortable, but they also come with significant privacy concerns. Most of these stem from their inherent flaw: they continuously listen to your surroundings, trying to discern the wake word. More often than we'd like, they end up storing personal conversations and exposing us to a variety of risks.

To mitigate privacy risks, you should manage your smart devices' settings, regularly check and delete stored data, and stay informed about their latest features and privacy policies. Ultimately, finding the balance between convenience and privacy is key to safely incorporating voice assistants into our daily lives.